learning with ai: closing the loop with clawdbot

what is it that makes learning difficult? or inefficient? i’d say it’s mostly due to consuming the wrong material. if it’s too easy, you get bored and don’t progress as fast as you could. if it’s too hard, well, you improve slowly and sometimes you quit. being exposed to the right material, slightly above your level, is what makes it fun, rewarding and sustainable.

i think a day may come when ai will grasp exactly where a person’s at, and will challenge her with the perfect material to stimulate fast-paced growth, making learning fun for everyone. obviously we’re not there yet, but the recent surge of clawdbot sparked an idea.

these past few months, i’ve been using grok a lot to question and challenge x posts in my feed. i’m talking about the @grok account you can tag in a thread. i like the condensed format of the responses and the fact that people can read your discussion with the ai. sometimes, even the x post author will pop in. grok has been teaching me about biology and finance, answering specific questions that would otherwise take much longer to resolve.

i agree that getting answers almost instantly might not cement knowledge as well as the manual process of looking for them, but overall we get more answers and iterate on ideas faster.

the problem is grok starts from a blank slate for every new question i ask him. he doesn’t have any context on my learning goals and he doesn’t know where i’m at, so the material he produces often misses the mark, especially in biology. that’s where clawdbot comes in: a nice wrapper of cron jobs (scheduling) and memory management (text files).

here’s my attempt at turning clawdbot into the perfect ai teacher. every day, he:

- pulls the last five x threads i’ve discussed with grok.

- maintains a map of my knowledge divided into three sections: gaps, learning, mastered.

- sends me a quiz of 8 questions: 4 review questions (gaps/learning), 4 new questions derived from my x activity.

- gives feedback and updates the knowledge map.
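to make the quiz split concrete, here’s a rough python sketch of the 4 review + 4 new selection. this is not my actual setup: `KnowledgeMap`, `plan_quiz` and the idea of passing topics around as plain strings are all made up for illustration, and in practice the new topics would come from the model reading the x threads.

```python
import random
from dataclasses import dataclass, field


@dataclass
class KnowledgeMap:
    # the three sections from the post; topics are plain strings (an assumption)
    gaps: list[str] = field(default_factory=list)
    learning: list[str] = field(default_factory=list)
    mastered: list[str] = field(default_factory=list)


def plan_quiz(kmap, new_topics, n_review=4, n_new=4, seed=None):
    """pick topics for one daily quiz: review topics plus fresh ones."""
    rng = random.Random(seed)

    # review questions are drawn from gaps and learning, never from mastered
    review_pool = kmap.gaps + kmap.learning
    review = rng.sample(review_pool, min(n_review, len(review_pool)))

    # new questions skip anything already tracked in the map
    known = set(review_pool) | set(kmap.mastered)
    fresh_pool = [t for t in dict.fromkeys(new_topics) if t not in known]

    return {"review": review, "new": fresh_pool[:n_new]}


if __name__ == "__main__":
    kmap = KnowledgeMap(
        gaps=["atp synthase", "bond duration"],
        learning=["mrna splicing", "yield curve", "krebs cycle"],
        mastered=["dna basics"],
    )
    topics_from_x = ["dna basics", "autophagy", "carry trade", "telomeres",
                     "autophagy", "repo market", "prions"]
    print(plan_quiz(kmap, topics_from_x, seed=0))
```

each selected topic would then be turned into a question by the model. the sketch only decides what gets asked, not how.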

there’s a bit of prompt engineering involved. for the quiz generation part, he’s tasked to: aim for deep understanding, not recall; ask why/how, not just what; probe mechanisms, connections and implications. as for updating the knowledge map, a couple of guidelines ensure topics only get promoted once understanding is clear.
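one way to picture the promotion guideline is a streak rule. the sketch below is only an assumption about how such a rule could look: the threshold `PROMOTE_AFTER`, the streak counter and the one-level demotion on a miss are hypothetical, and in my setup the decision is made by the model following written guidelines, not by code.

```python
LEVELS = ["gaps", "learning", "mastered"]
PROMOTE_AFTER = 2  # hypothetical: correct answers in a row needed to move up


def update_topic(level, streak, correct):
    """return (new_level, new_streak) after one graded answer."""
    if not correct:
        # a miss resets the streak and drops the topic one level (never below gaps)
        idx = max(LEVELS.index(level) - 1, 0)
        return LEVELS[idx], 0

    streak += 1
    if streak >= PROMOTE_AFTER and level != "mastered":
        # enough consecutive correct answers: move up one level, restart the streak
        return LEVELS[LEVELS.index(level) + 1], 0
    return level, streak


if __name__ == "__main__":
    state = ("gaps", 0)
    for answer in [True, True, True, False]:
        state = update_topic(*state, answer)
        print(state)
```

requiring more than one correct answer before promoting is a cheap guard against lucky guesses, which matters since the model tends to overestimate the learner.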

so far i’m happy with the quizzes even though he tends to overestimate my level. as the knowledge base grows, i expect the ai to pinpoint with increasing accuracy where i’m at.

this is just an mvp. there’s work to do on memory management and many ways to improve the learning experience, like having an image generation model illustrate concepts.

i’m not sharing my setup’s code, so if you want to replicate this, give this post to a state-of-the-art model :)

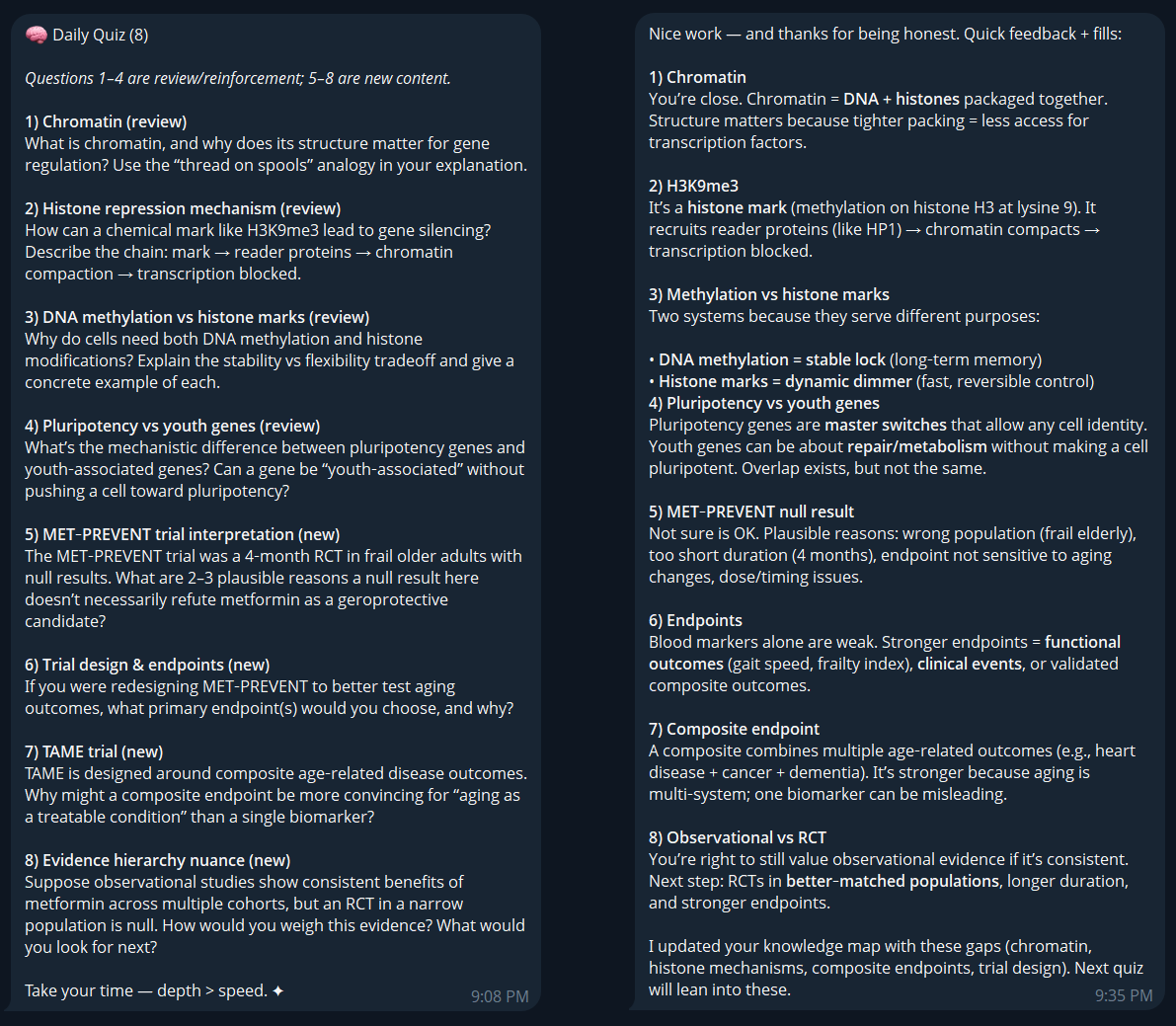

however, i’ll share today’s quiz to show what it looks like: